The accuracy of your data can make or break your business decisions. Imagine relying on outdated, inconsistent, or duplicated data—how confident would you feel about the insights derived from it? This is where effective data cleaning becomes indispensable.

In this comprehensive guide, we’ll walk you through the essential tools and strategies you need to maintain top-notch data quality. From basic tools like Excel to advanced platforms like Talend and Alteryx, we’ll explore the best solutions to help you transform your raw data into reliable assets.

- Identify Critical Data Quality Issues: Start by recognising common data problems like duplicates, outdated records, and inconsistencies, which can lead to poor business decisions.

- Choose the Right Data Cleaning Tools: Select tools like OpenRefine, Trifacta, and Talend for effective data profiling, standardisation, and cleansing to maintain accurate and reliable datasets.

- Implement a Step-by-Step Data Cleaning Process: Follow a structured approach to data cleaning, from profiling and standardising to removing duplicates and handling missing data, ensuring thorough and consistent results.

- Leverage Advanced Techniques for Complex Datasets: Use regular expressions and automated cleansing pipelines for large-scale data cleaning, enabling more efficient processing and higher data accuracy.

- Continuously Monitor and Improve Data Quality: Establish ongoing data audits and set clear KPIs for data accuracy and consistency, ensuring your data remains a reliable asset over time.

- Address Scalability and Integration Challenges: Prepare for growing data volumes and ensure seamless integration across systems to maintain consistent data quality as your business evolves.

What are the key benefits of implementing data cleaning tools?

Clean data is crucial to the success of any business, offering substantial value and reducing operational hassles such as errors in invoicing, misdirected shipments, and processing inaccuracies. Implementing effective data cleaning strategies can yield numerous benefits, leading to increased profitability and reduced operational costs for enterprises of all sizes.

Enhanced Decision Making

Making informed business decisions hinges on accurate customer data. With data volumes doubling every 12 to 18 months, the risk of errors increases. This is where data cleaning tools become invaluable. By ensuring your data is current and accurate, you can enhance your analytics and business intelligence, leading to more effective decision-making. Clean data supports successful business strategies and contributes significantly to your company’s success.

Improved Customer Acquisition Efforts

Accurate data plays a pivotal role in boosting customer acquisition activities. Clean, precise, and up-to-date data enhances marketing processes and increases returns on email and postal campaigns. Data cleaning techniques ensure the seamless management of multi-channel customer data, guaranteeing the success of your marketing efforts.

Streamlined Business Operations

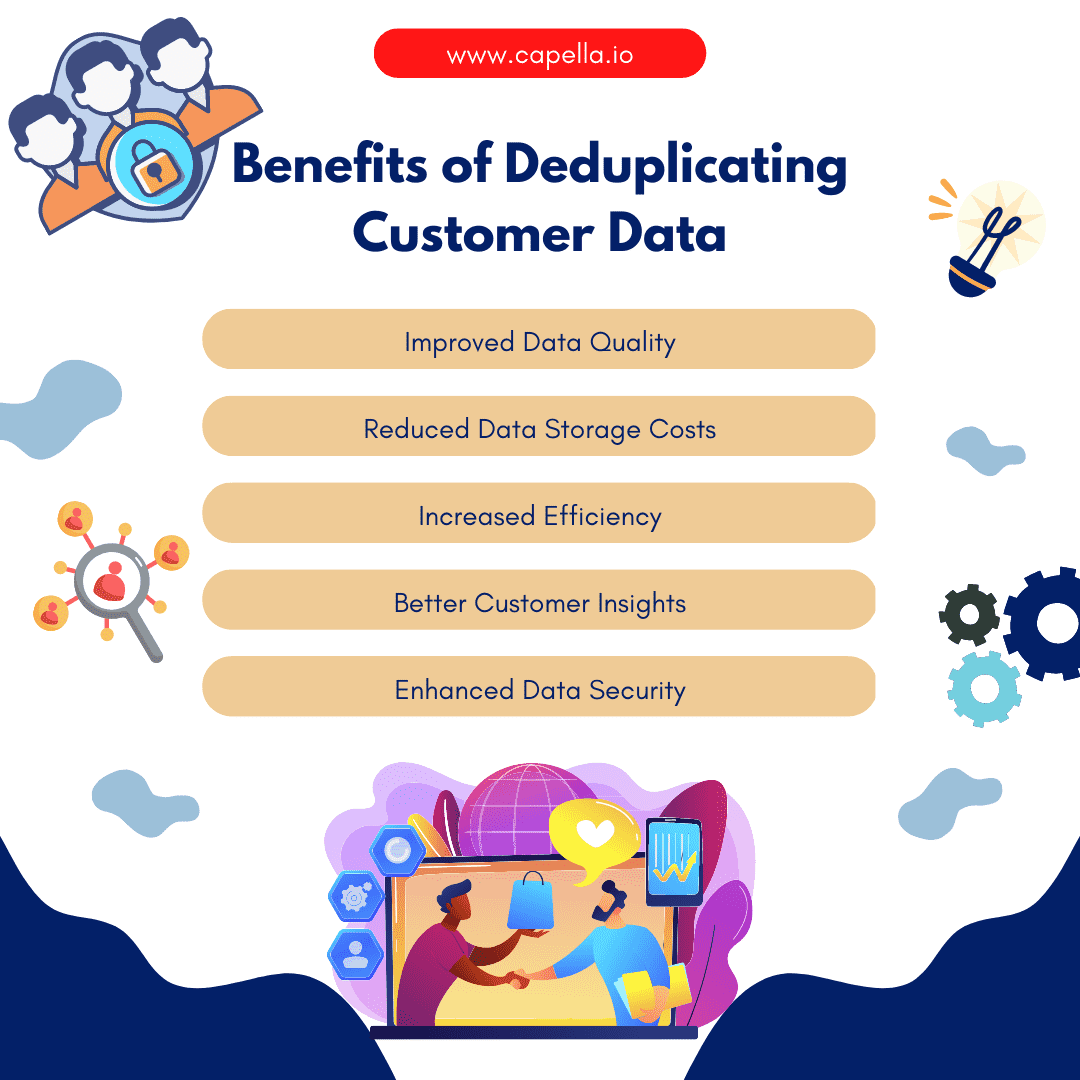

Eliminating duplicate data from your database allows your enterprise to streamline business practices and reduce costs. Other benefits include optimising job descriptions and integrating roles within the organisation. Up-to-date sales data can enhance the market performance of your products or services. Coupled with robust analytics, data cleaning strategies can help identify the optimal timing for launching new products or services.

What Matters Most?

Understanding the specific data quality issues that impact your organisation is fundamental to maintaining integrity. Prioritising data cleaning as a foundational step ensures that analyses rely on accurate information, which typically leads to improved decision-making. Additionally, fostering a culture of data governance allows us to continuously monitor and enhance data quality, empowering organisations to stay ahead in a data-driven landscape.

How can I ensure accurate data for effective business intelligence?

Impure data can severely hinder business intelligence, affecting operational, tactical, and strategic levels. Inefficient decision-making, wasted time and resources, flawed analyses, and missed opportunities are just a few of the consequences. These issues can undermine business competitiveness and negatively impact organisational morale and inter-departmental trust.

Significance of Data Cleaning for BI

Business intelligence begins with data acquisition, but the data collected isn’t immediately ready for analysis. It requires cleansing, validation, and, if necessary, enrichment and transformation to be effectively utilised in business intelligence projects. Data cleansing tools optimise the performance and efficiency of BI systems by reducing data volume, complexity, and redundancy.

Clean and enriched data leads to better business intelligence outcomes, including increased operational efficiency, improved customer relationship management, enhanced regulatory compliance, superior data visualisation, and more accurate diagnostic and predictive analyses. This fosters greater trust in data, which in turn unearths tactical, operational, and strategic insights, facilitating superior decision-making.

How Data Scrubbing Simplifies Data Management

Data scrubbing is essential in various data management processes, ensuring data quality and consistency across different systems. Implementing effective data cleaning strategies is crucial for maintaining high standards of data integrity.

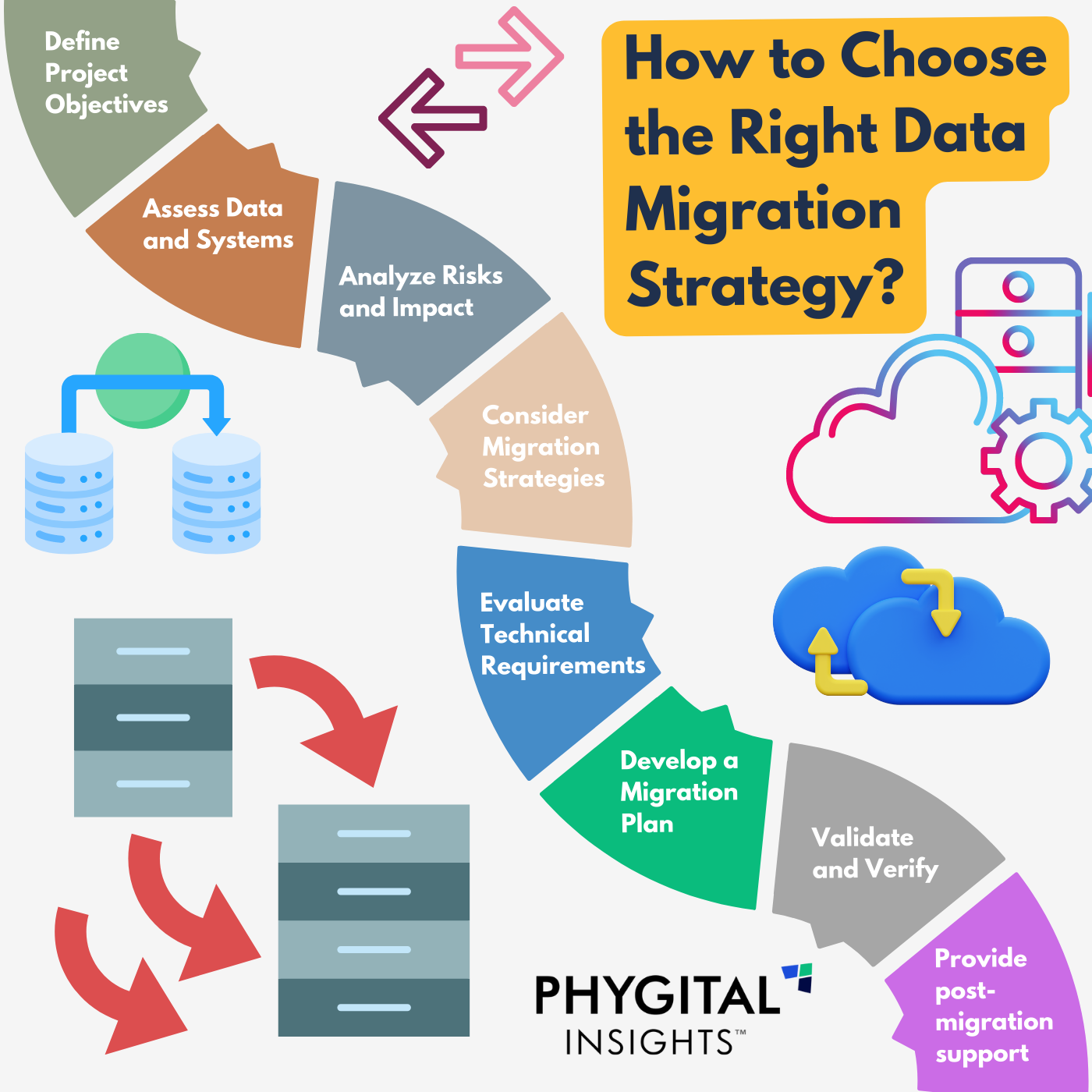

Data Integration: Data integration is a core data management process that involves consolidating data from diverse sources into a single, cohesive platform. Effective data cleaning tools standardise and format the incoming data before it is integrated into the destination system. This ensures that the data set is uniform and ready for seamless integration.

Data Migration: Data migration entails transferring data from one system to another. During this process, it is vital to maintain data quality and consistency so that the destination data is correctly formatted and structured, without duplication. Data cleaning tools are instrumental in efficiently cleaning data during migration, thereby enhancing data quality throughout the enterprise.

Data Transformation: Before data is loaded onto its destination, it must undergo transformation to meet specific system criteria such as format and structure. Data transformation involves applying rules, filters, and expressions to the data. Utilising data scrubbing tools during this process helps cleanse the data using built-in transformations, ensuring it meets the desired operational and technical requirements.

ETL Process: The ETL (extraction, transformation, and loading) process is critical for preparing data for reporting and analysis. During this process, data scrubbing ensures that only high-quality data is used for decision-making. For instance, a retail company might receive data from multiple sources, such as CRM or ERP systems, which may contain errors or duplicate entries. Effective data cleaning techniques identify and correct these inconsistencies, converting the data into a standard format before loading it into a target database or data warehouse.

Source: Gartner
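The retail ETL example above can be sketched in a few lines of Python. This is a minimal illustration only: the sample CRM and ERP rows, the `customers` table, and the choice of email as the deduplication key are all assumptions made for the example, and an in-memory SQLite database stands in for the target warehouse.

```python
import sqlite3


def extract():
    """Stand-in for reading from CRM and ERP systems (hypothetical sample rows)."""
    crm = [
        {"email": " Ana@Example.com ", "name": "Ana Diaz"},
        {"email": "li@example.com", "name": "li wei"},
    ]
    erp = [
        {"email": "ana@example.com", "name": "ANA DIAZ"},
        {"email": "sam@example.com", "name": "Sam Roy"},
    ]
    return crm + erp


def transform(rows):
    """Standardise formats, then keep the first record seen for each email."""
    cleaned = {}
    for row in rows:
        email = row["email"].strip().lower()
        name = row["name"].strip().title()
        cleaned.setdefault(email, name)
    return sorted(cleaned.items())


def load(records, connection):
    """Write the cleaned records to the target table."""
    connection.execute("CREATE TABLE customers (email TEXT PRIMARY KEY, name TEXT)")
    connection.executemany("INSERT INTO customers VALUES (?, ?)", records)
    connection.commit()


connection = sqlite3.connect(":memory:")
load(transform(extract()), connection)
loaded = connection.execute("SELECT email, name FROM customers ORDER BY email").fetchall()
print(loaded)
```

Cleaning in the transform step means the duplicate Ana record never reaches the target table, so the primary key constraint acts as a safety net, not as the main defence.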

What are the common issues that affect data quality?

Poor data quality is the primary barrier to the successful, profitable use of machine learning. To leverage technologies like machine learning effectively, you must prioritise data quality. Let’s delve into some of the most common data quality issues and explore how we can address them using robust data cleaning strategies.

1. Duplicate Data

Modern organisations face an influx of data from various sources—local databases, cloud data lakes, and streaming data. Additionally, application and system silos contribute to significant duplication and overlap. For example, duplicated contact details can severely impact customer experience. Marketing campaigns suffer when some prospects are overlooked while others are contacted repeatedly. Duplicate data also skews analytical results and can produce inaccurate machine learning models.

Implementing rule-based data quality management helps monitor and control duplicate records. Predictive data quality (DQ) systems enhance this by auto-generating rules that improve continuously through machine learning. These systems identify both fuzzy and exact matches, assign likelihood scores for duplicates, and ensure continuous data quality across applications.
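To make the idea of exact and fuzzy matches with likelihood scores concrete, here is a minimal rule-based sketch using Python's standard `difflib` module. It is not a predictive DQ system: the field names (`id`, `name`, `email`), the sample contacts, and the 0.85 threshold are assumptions chosen for illustration.

```python
from difflib import SequenceMatcher
from itertools import combinations


def normalise(record):
    """Lowercase and collapse whitespace so trivial differences do not hide a match."""
    return " ".join(f"{record['name']} {record['email']}".lower().split())


def duplicate_candidates(records, threshold=0.85):
    """Return (id_a, id_b, score) for every pair scoring at or above the threshold.

    A score of 1.0 is an exact match after normalisation; lower scores are fuzzy matches.
    """
    pairs = []
    for a, b in combinations(records, 2):
        score = SequenceMatcher(None, normalise(a), normalise(b)).ratio()
        if score >= threshold:
            pairs.append((a["id"], b["id"], round(score, 2)))
    return pairs


contacts = [
    {"id": 1, "name": "Jane Smith", "email": "jane.smith@example.com"},
    {"id": 2, "name": "jane  smith", "email": "Jane.Smith@example.com"},
    {"id": 3, "name": "Jane Smyth", "email": "jane.smith@example.com"},
    {"id": 4, "name": "Robert Jones", "email": "rob.jones@example.com"},
]

candidates = duplicate_candidates(contacts)
for id_a, id_b, score in candidates:
    print(id_a, id_b, score)
```

Comparing every pair is quadratic in the number of records, so a sketch like this suits small tables only. Larger datasets usually need a blocking step, such as comparing only records that share an email domain or postcode.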

2. Inaccurate Data

Accurate data is vital, especially for highly regulated industries like healthcare. Recent experiences with COVID-19 highlight the critical need for high-quality data to plan appropriate responses effectively. Inaccurate data fails to provide a true picture of reality, leading to poor decision-making. If customer data is inaccurate, personalised experiences fall short, and marketing campaigns underperform.

Data inaccuracies stem from various sources, including human errors, data drift, and data decay. According to Gartner, approximately 3% of global data decays monthly, which is alarming. Over time, data quality degrades, and its integrity diminishes across different systems. While automated data management offers some solutions, dedicated data quality tools significantly enhance accuracy.

Using predictive, continuous, and self-service DQ, organisations can detect data quality issues early in the data lifecycle and address them proactively, ensuring reliable analytics.

3. Ambiguous Data

Large databases or data lakes can contain errors, even under strict supervision, especially when dealing with high-speed data streaming. Misleading column headings, formatting issues, and unnoticed spelling errors can create ambiguous data, leading to flawed reporting and analytics.

Predictive DQ systems continuously monitor data, using auto-generated rules to resolve ambiguities quickly by identifying and correcting issues as they arise. This results in high-quality data pipelines that support real-time analytics and trusted outcomes.

4. Incomplete Data

The phrase “You don’t know what you don’t know” aptly describes the challenge of incomplete data in CRM systems. Missing data can undermine the reliability and validity of information, making it difficult to trust the findings. This can lead to biases and distort the overall picture.

Incomplete data hampers marketing efforts, leading to less effective communication and irrelevant messaging. It also affects customer experiences by preventing personalised interactions. Without critical information, assessing risks, identifying trends, and developing actionable strategies becomes challenging.

5. Outdated Data

Data, much like fruit and vegetables, can go bad, though not as quickly. People frequently change jobs, emails, and phone numbers, rendering your data obsolete. Outdated data is particularly detrimental because it may appear legitimate but consumes time and resources without yielding benefits.

Ensuring High-Quality Data

Addressing these common data quality issues with effective data cleaning techniques is crucial for maintaining high standards of data integrity. By utilising advanced data cleaning tools and strategies, businesses can ensure their data remains accurate, consistent, and ready for effective use in machine learning and other analytical processes.

Source: Experian

What Are Data Quality Checks?

Data quality checks are crucial for ensuring the integrity and usability of data. The process involves defining quality metrics, running tests to identify quality issues, and correcting problems where the system supports correction. Typically, these checks are defined at the attribute level to facilitate quick testing and resolution.

Common Data Quality Checks

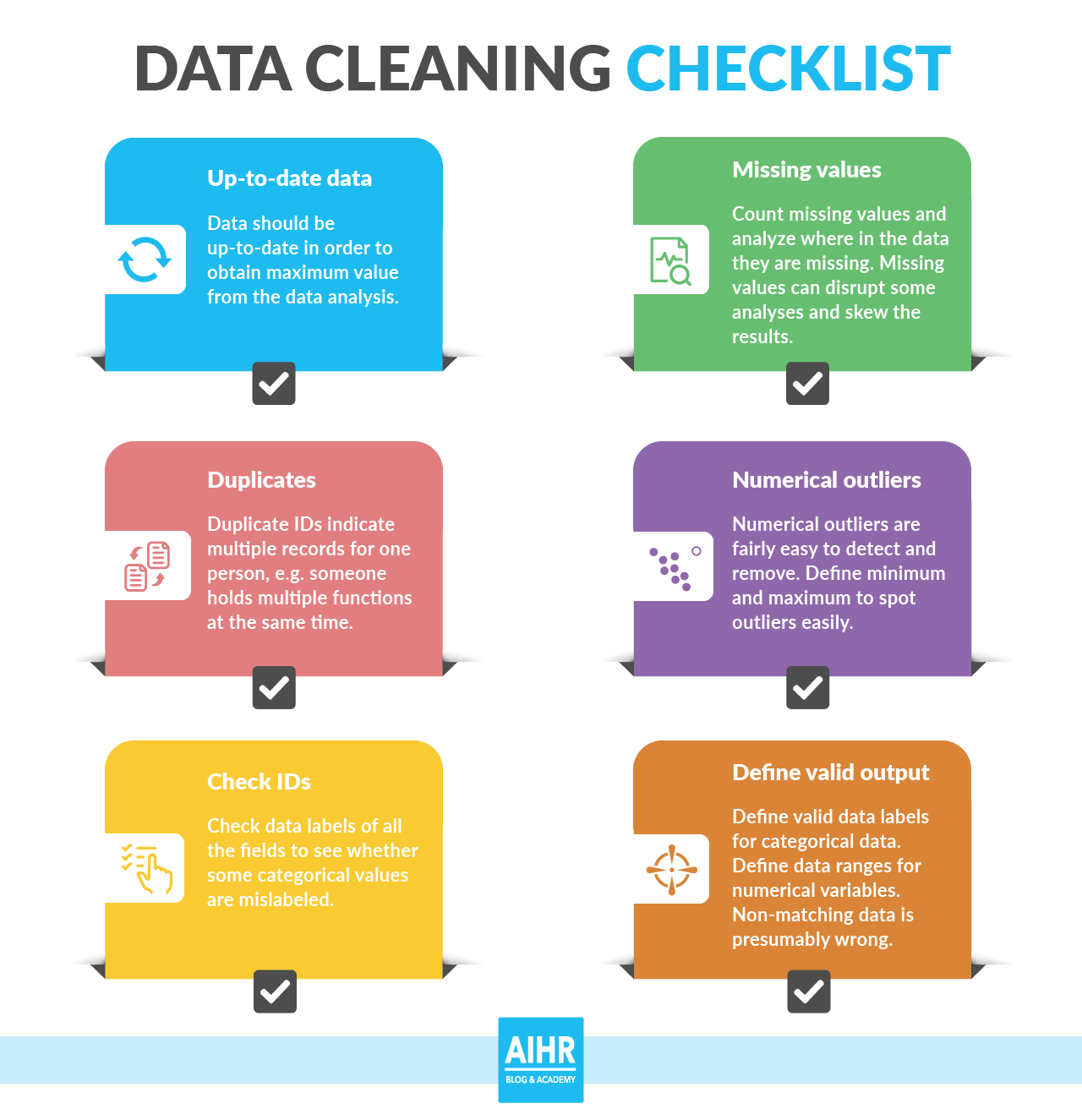

- Identifying Duplicates or Overlaps for Uniqueness: Ensuring that data entries are unique is fundamental. This involves identifying and removing duplicate or overlapping records to maintain data quality and accuracy.

- Checking for Mandatory Fields, Null Values, and Missing Values: It is essential to ensure all mandatory fields are filled and to identify and rectify any null or missing values. This step is critical for maintaining data completeness.

- Applying Formatting Checks for Consistency: Consistent formatting is key to data usability. Formatting checks ensure that data entries adhere to the required format, enhancing readability and analysis.

- Assessing the Range of Values for Validity: Validating the range of values ensures that data falls within expected parameters. This check is crucial for detecting anomalies and errors in data.

- Checking Recency or Freshness of Data: Verifying how recent the data is, or when it was last updated, helps maintain data relevance and accuracy. Fresh data is more likely to be reliable and useful.

- Validating Row, Column, Conformity, and Value Checks for Integrity: Ensuring the integrity of data through row and column conformity checks guarantees that the data structure is consistent and valid across the dataset.
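Several of the checks above can be expressed as attribute-level rules. The following is a minimal sketch, assuming a hypothetical customer record with `id`, `email`, `age`, and `updated` fields. The email pattern, the 0 to 120 age range, and the 365-day freshness window are illustrative choices, not recommended standards.

```python
import re
from datetime import date

# Deliberately loose pattern: something@something.something, with no spaces.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def run_quality_checks(rows, today, max_age_days=365):
    """Return a list of (row_id, issue) tuples; an empty list means every check passed."""
    issues = []
    seen_emails = set()
    for row in rows:
        row_id = row["id"]
        email = row.get("email")

        # Completeness: the mandatory email field must be present and non-empty.
        if not email:
            issues.append((row_id, "missing email"))
        else:
            # Format: the value must look like an email address.
            if not EMAIL_PATTERN.match(email):
                issues.append((row_id, "malformed email"))
            # Uniqueness: the same email must not appear twice (case-insensitive).
            key = email.lower()
            if key in seen_emails:
                issues.append((row_id, "duplicate email"))
            seen_emails.add(key)

        # Validity: age must be present and fall inside the expected range.
        age = row.get("age")
        if age is None:
            issues.append((row_id, "missing age"))
        elif not 0 <= age <= 120:
            issues.append((row_id, "age out of range"))

        # Freshness: the record must have been updated recently enough.
        if (today - row["updated"]).days > max_age_days:
            issues.append((row_id, "stale record"))
    return issues


rows = [
    {"id": 1, "email": "ana@example.com", "age": 34, "updated": date(2024, 5, 1)},
    {"id": 2, "email": "ANA@example.com", "age": 29, "updated": date(2024, 4, 12)},
    {"id": 3, "email": "not-an-email", "age": 41, "updated": date(2024, 3, 3)},
    {"id": 4, "email": "", "age": 150, "updated": date(2021, 1, 15)},
]

issues = run_quality_checks(rows, today=date(2024, 6, 1))
for row_id, issue in issues:
    print(row_id, issue)
```

Returning a list of issues instead of raising on the first failure lets a single pass report every problem, which is usually more useful when profiling a dataset.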

Our Tactical Recommendations

Implementing automated data profiling is crucial for quickly spotting anomalies, enabling proactive issue resolution. Establishing a dedicated data stewardship team enhances oversight and ensures consistent adherence to quality standards. Furthermore, utilising visualisation tools to identify patterns in dirty data can reveal underlying issues, guiding targeted cleaning strategies and optimising overall data integrity.

How do I apply effective techniques for cleaning my data?

Effective data cleaning strategies are vital for handling missing data and ensuring accurate analysis. Understanding the types of missing values in datasets is crucial for this process.

Types of Missing Values

- Missing Completely At Random (MCAR): MCAR occurs when the probability of data being missing is the same across all observations. There is no relationship between the missing data and any other data within the dataset. This type of missing data is purely random and lacks any discernible pattern.

Example: In a survey about library books, some overdue book values might be missing due to human error in recording.

- Missing At Random (MAR): MAR happens when the probability of data being missing is related to observed data but not to the missing data itself. The pattern of missing data can be explained by other variables for which you have complete information.

Example: In a survey, ‘Age’ values might be missing for respondents who did not disclose their ‘Gender’. Here, the missingness of ‘Age’ depends on ‘Gender’, but the missing ‘Age’ values are random among those who did not disclose their ‘Gender’.

- Missing Not At Random (MNAR): MNAR occurs when the missingness of data is related to the unobserved data itself, which is not included in the dataset. This type of missing data has a specific pattern that cannot be explained by other observed variables.

Example: In a survey about library books, people with more overdue books might be less likely to respond to the survey. Thus, whether the number of overdue books is missing depends on that number itself.
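One simple way to probe which pattern you might be facing is to compare the missing rate of a field across the values of another, fully observed field. The sketch below assumes a hypothetical survey with `gender` and `age` columns, mirroring the MAR example above. Roughly equal rates across groups are consistent with MCAR, clearly different rates point towards MAR, and MNAR generally cannot be confirmed from the observed data alone.

```python
from collections import defaultdict


def missing_rate_by_group(rows, target, group_by):
    """Return {group value: share of rows where `target` is missing (None)}."""
    totals = defaultdict(int)
    missing = defaultdict(int)
    for row in rows:
        group = row[group_by]
        totals[group] += 1
        if row[target] is None:
            missing[group] += 1
    return {group: missing[group] / totals[group] for group in totals}


# Hypothetical survey responses, for illustration only.
survey = [
    {"gender": "F", "age": 31},
    {"gender": "F", "age": 45},
    {"gender": "M", "age": 27},
    {"gender": "M", "age": 38},
    {"gender": "undisclosed", "age": None},
    {"gender": "undisclosed", "age": None},
    {"gender": "undisclosed", "age": 52},
    {"gender": "undisclosed", "age": None},
]

rates = missing_rate_by_group(survey, target="age", group_by="gender")
for group, rate in rates.items():
    print(f"{group}: {rate:.0%} of age values missing")
```

With real data, group sizes this small would not support any conclusion. The comparison is a starting point for investigation, not a formal test.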

Effective Data Cleaning Techniques for Better Data

- Standardise Capitalisation: Ensuring consistent capitalisation within your data is crucial. Mixed capitalisation can create erroneous categories and cause issues during data processing. For example, “Bill” as a name versus “bill” as an invoice. To avoid these problems, standardise your data to lowercase where appropriate.

- Convert Data Types: Converting data types is essential, especially for numbers and dates. Numbers that were entered as text should be converted to numeric types to enable mathematical processing. Similarly, dates stored as text should be changed to a standard numerical format (e.g., “24/09/2021” instead of “September 24th 2021”).

- Fix Errors: Removing errors from your data is critical. Simple typos can lead to significant issues, such as missing out on key findings or miscommunication with customers. Regular spell-checks and consistency checks can help prevent these errors. For example, ensure all currency values are standardised to one format, such as US dollars.

- Identify Conflicts in the Database: The final step in the data cleaning process is conflict detection. This involves identifying and resolving records that contradict or exclude each other, for example a mismatch between a state and a ZIP code. If verification is not possible, such records should be marked as unreliable for further analysis.
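The techniques above can be sketched as small helper functions. Everything named here is hypothetical and for illustration: the accepted date formats are assumptions, the ZIP prefix table is a three-entry stand-in for a real reference dataset, and the date helper emits ISO 8601 (YYYY-MM-DD) instead of the DD/MM/YYYY form shown in the text, because ISO dates are unambiguous and sort correctly as strings.

```python
import re
from datetime import datetime

# Matches ordinal suffixes that directly follow a digit, as in "24th".
ORDINAL_SUFFIX = re.compile(r"(?<=\d)(st|nd|rd|th)\b")

# Hypothetical lookup of ZIP code prefixes to states, for illustration only.
ZIP_PREFIX_TO_STATE = {"100": "NY", "902": "CA", "606": "IL"}


def standardise_text(value):
    """Trim surrounding whitespace and lowercase a free-text category."""
    return value.strip().lower()


def to_number(value):
    """Convert a number stored as text, such as '1,250.50', to a float."""
    return float(value.replace(",", "").strip())


def to_iso_date(value, formats=("%d/%m/%Y", "%B %d %Y", "%Y-%m-%d")):
    """Parse a date written in one of the assumed formats and return YYYY-MM-DD."""
    text = ORDINAL_SUFFIX.sub("", value.strip())
    for fmt in formats:
        try:
            return datetime.strptime(text, fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"Unrecognised date format: {value!r}")


def has_state_zip_conflict(state, zip_code):
    """Return True when the ZIP prefix is known and maps to a different state."""
    expected = ZIP_PREFIX_TO_STATE.get(zip_code[:3])
    return expected is not None and expected != state


print(standardise_text("  Bill "))
print(to_number("1,250.50"))
print(to_iso_date("September 24th 2021"))
print(has_state_zip_conflict("CA", "10001"))
```

Records that fail conversion or show a conflict are better flagged than silently dropped, in line with the advice above to mark unverifiable records as unreliable.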